🐙 When Your SFU Becomes an Octopus — Understanding Mediasoup the Fun Way

When I first started building my scalable Zoom clone, I thought I just needed a few Socket.IO events and a WebRTC peer connection. Then I met Mediasoup — and suddenly, I was talking to an octopus with 12 arms.

🎨 The Meme That Says It All

Every developer’s first week trying to understand transports and consumers.

At first glance, WebRTC seems like a simple “browser connects to browser” handshake. But once you add a Selective Forwarding Unit (SFU) — the middleman that handles multiple users’ media streams — things get spicy.

🧠 What’s Actually Going On Here

Each arrow in that image isn’t random — it represents a real network flow that happens during a WebRTC session.

Let’s break it down:

- ICE Candidate Exchange → figuring out how to reach each other (through NATs, firewalls, or Wi-Fi sorcery).

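- DTLS/SRTP Setup → encrypting media channels so only you, your peers, and the SFU relaying the media can see/hear them (with an SFU, encryption is hop-by-hop: browser to server, then server to browser).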

- Producer / Consumer → sending and receiving media streams.

- Transport → the actual connection tunnels Mediasoup creates per direction (uplink and downlink).

- RTP/RTCP Flow → the real audio/video data zipping through.

- DataChannel Binding → for non-media stuff like chat messages or reactions.

Now imagine managing that for hundreds of users — at once. That’s when Mediasoup starts to feel like the multitasking chef in a kitchen full of tangled cables.

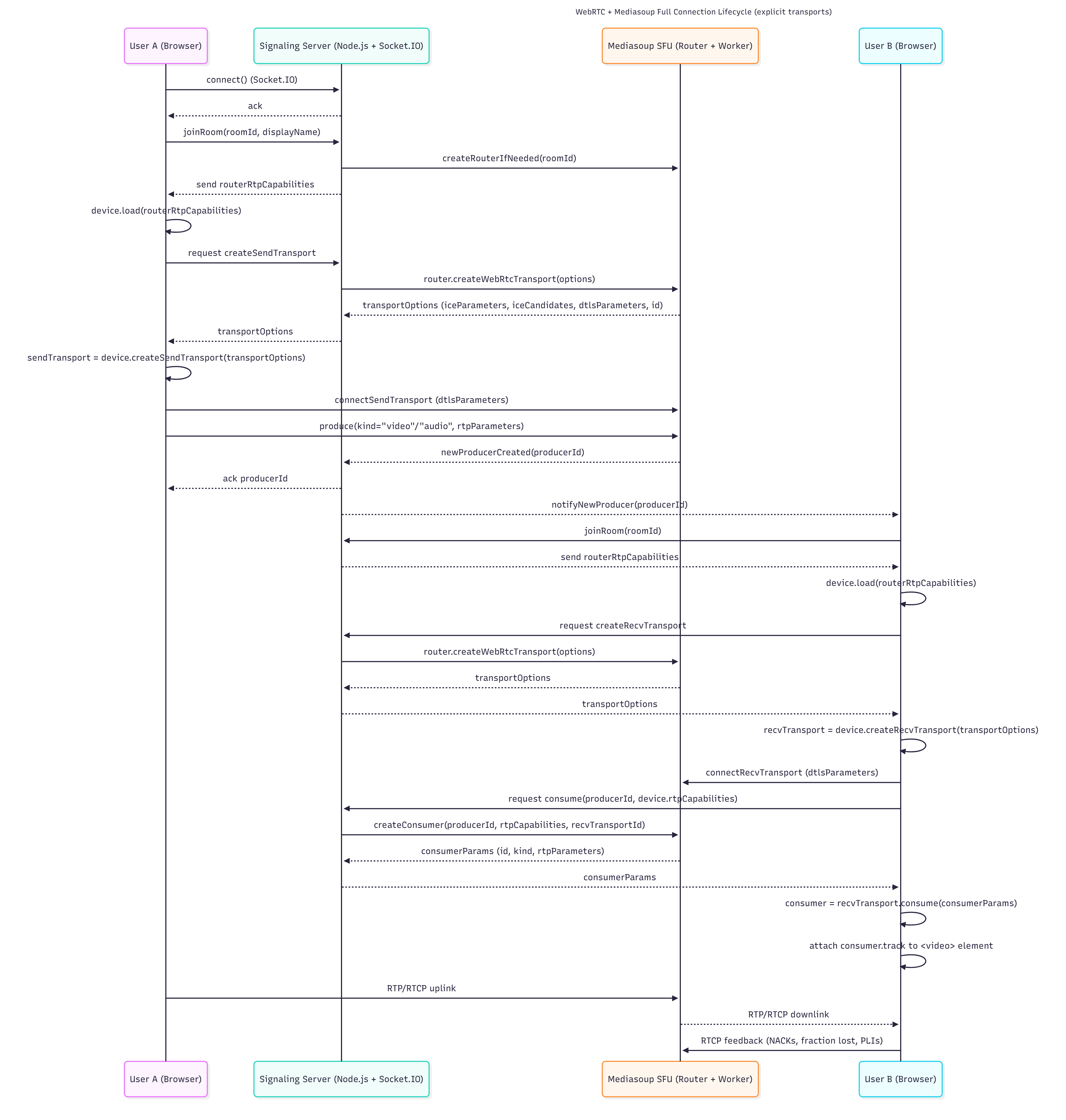

⚙️ The Big Picture — How It Actually Works

Here’s a visualization of the full connection lifecycle:

🧩 Step-by-Step: From Camera to Connection

Let’s break down what really happens when two people join a Mediasoup-powered call.

🎥 1. getUserMedia() — Opening the Camera and Mic

This is where it all starts. The browser asks the user for permission to use their camera and microphone.

Once granted, getUserMedia() resolves to a MediaStream made of tracks, and each track (audio or video) later becomes its own Producer in Mediasoup.
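To make the "one track, one Producer" idea concrete, here is a minimal TypeScript sketch. In a real browser you would call `navigator.mediaDevices.getUserMedia({ audio: true, video: true })` and then mediasoup-client's `sendTransport.produce({ track })` for each track. The `TrackLike`/`StreamLike` interfaces and the `planProducers` helper are hypothetical stand-ins so the idea runs outside a browser.

```typescript
// Hypothetical structural stand-ins for the browser's MediaStreamTrack and
// MediaStream, so this sketch runs without a browser.
type MediaKind = "audio" | "video";

interface TrackLike {
  id: string;
  kind: MediaKind;
}

interface StreamLike {
  getTracks(): TrackLike[];
}

// Hypothetical plan entry: which local track feeds which Producer.
interface ProducerPlan {
  trackId: string;
  kind: MediaKind;
}

// One Producer per track: audio and video travel (and are consumed) independently,
// so a peer can pause its audio Producer without touching the video one.
function planProducers(stream: StreamLike): ProducerPlan[] {
  return stream.getTracks().map((track) => ({ trackId: track.id, kind: track.kind }));
}

// In a browser, the equivalent loop would be roughly:
//   const stream = await navigator.mediaDevices.getUserMedia({ audio: true, video: true });
//   for (const track of stream.getTracks()) await sendTransport.produce({ track });
```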

🧭 2. joinRoom() — Saying “I’m Here!”

User A connects to the Signaling Server (built with Node.js + Socket.IO) and announces their intent to join a room.

The signaling server then checks whether a Mediasoup Router exists for that room and creates one if it doesn’t. Either way, it sends back the router’s RTP Capabilities: the codecs and RTP extensions that router supports.

This is how both ends agree on what kind of media they can talk in.
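The "create a router only if the room doesn't have one yet" step can be sketched like this. It is a minimal sketch that assumes an injected `createRouter` factory. With the real mediasoup library, that factory would be something like `() => worker.createRouter({ mediaCodecs })`, and the browser would pass the returned capabilities to mediasoup-client's `device.load({ routerRtpCapabilities })`. `RoomRegistry` and `joinRoom` are hypothetical names, not code from the linked repo.

```typescript
// Only the part of a mediasoup Router this sketch touches. (`rtpCapabilities`
// is a real Router property in mediasoup v3; the rest is illustrative.)
interface RouterLike {
  rtpCapabilities: unknown;
}

// Hypothetical registry: at most one router per room, created on first join.
class RoomRegistry<R extends RouterLike> {
  // Stores promises, not routers, so concurrent joins share one creation.
  private readonly routers = new Map<string, Promise<R>>();
  private readonly createRouter: () => Promise<R>;

  constructor(createRouter: () => Promise<R>) {
    this.createRouter = createRouter;
  }

  getOrCreateRouter(roomId: string): Promise<R> {
    const existing = this.routers.get(roomId);
    if (existing) return existing;

    const created = this.createRouter();
    this.routers.set(roomId, created);
    // Forget a failed creation so the next join can retry.
    created.catch(() => {
      if (this.routers.get(roomId) === created) this.routers.delete(roomId);
    });
    return created;
  }

  // What a hypothetical "joinRoom" Socket.IO handler would send back.
  async joinRoom(roomId: string): Promise<unknown> {
    const router = await this.getOrCreateRouter(roomId);
    return router.rtpCapabilities;
  }
}
```

The design choice here is to cache the promise instead of the resolved router. Otherwise, two people joining the same brand-new room at the same instant could each trigger a router creation and end up on different routers, unable to see each other.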

🏗️ 3. Creating Transports — The Highway Builders

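Mediasoup doesn’t push media over one big connection. It uses transports instead. A transport is like a secure tunnel carrying your encrypted packets, and on the browser side each mediasoup-client transport wraps its own RTCPeerConnection.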

There are two kinds:

- Send Transport → for sending your local camera/mic stream.

- Recv Transport → for receiving streams from others.

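Each transport has two ends. The server creates its side first and sends the connection parameters to the browser over the signaling channel. The browser then creates the matching side.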
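Here is a minimal sketch of the per-peer bookkeeping, assuming each peer owns at most one send and one recv transport. `id`, `iceParameters`, `iceCandidates`, and `dtlsParameters` are fields that a mediasoup `WebRtcTransport` exposes, and mediasoup-client's `device.createSendTransport()` / `device.createRecvTransport()` expect the same fields. `PeerTransports` and `toClientParams` are hypothetical helpers.

```typescript
// The fields of a mediasoup WebRtcTransport that the browser needs in order to
// build its side (mediasoup-client's device.createSendTransport() and
// device.createRecvTransport() take these same four fields).
interface TransportLike {
  id: string;
  iceParameters: unknown;
  iceCandidates: unknown[];
  dtlsParameters: unknown;
}

type Direction = "send" | "recv";

// Hypothetical per-peer bookkeeping: one uplink and one downlink transport.
class PeerTransports<T extends TransportLike> {
  private readonly transports: Partial<Record<Direction, T>> = {};

  add(direction: Direction, transport: T): void {
    if (this.transports[direction]) {
      throw new Error(`peer already has a ${direction} transport`);
    }
    this.transports[direction] = transport;
  }

  get(direction: Direction): T | undefined {
    return this.transports[direction];
  }

  // Later signaling messages (connect, produce, consume) refer to transports by id.
  findById(id: string): T | undefined {
    return [this.transports.send, this.transports.recv].find((t) => t?.id === id);
  }
}

// Only these plain fields go over Socket.IO; the transport object stays on the server.
function toClientParams(t: TransportLike): TransportLike {
  return {
    id: t.id,
    iceParameters: t.iceParameters,
    iceCandidates: t.iceCandidates,
    dtlsParameters: t.dtlsParameters,
  };
}
```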

🔐 4. ICE + DTLS Handshake — Finding the Path

This is where WebRTC magic happens.

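ICE (Interactive Connectivity Establishment) tests candidate addresses to find a working route between client and server. These can be local LAN addresses, public addresses, or relayed ones. If you’re behind a NAT or firewall, STUN/TURN servers step in to help: STUN discovers your public address, and TURN relays the traffic when nothing else gets through.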

Then DTLS (Datagram Transport Layer Security) runs a handshake over that route to authenticate both ends and agree on encryption keys.

Once the handshake succeeds, both sides use those keys to send media as encrypted RTP packets (SRTP).

Think of it like:

“Okay, we’ve found each other through the maze of the internet — now let’s talk securely.”
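On the server, the DTLS part of this step comes down to one call. In mediasoup-client, the browser's transport emits a `connect` event carrying its `dtlsParameters`, which you forward over Socket.IO. The server then passes them to the mediasoup transport's `connect({ dtlsParameters })`. The handler below is a hypothetical sketch of that server side, with a structural `ConnectableTransport` type standing in for the real mediasoup transport.

```typescript
// Structural stand-in for a server-side mediasoup transport. The real
// WebRtcTransport has connect({ dtlsParameters }) returning a Promise.
interface ConnectableTransport {
  id: string;
  connect(params: { dtlsParameters: unknown }): Promise<void>;
}

type ConnectResult = { ok: true } | { ok: false; error: string };

// Hypothetical "connectTransport" signaling handler. The browser sends the DTLS
// parameters (role and certificate fingerprints) its transport emitted in the
// "connect" event; the server hands them to the matching transport so the DTLS
// handshake can complete over the path ICE selected.
async function handleConnectTransport(
  transports: Map<string, ConnectableTransport>,
  request: { transportId: string; dtlsParameters: unknown },
): Promise<ConnectResult> {
  const transport = transports.get(request.transportId);
  if (!transport) {
    return { ok: false, error: `unknown transport ${request.transportId}` };
  }
  try {
    await transport.connect({ dtlsParameters: request.dtlsParameters });
    return { ok: true };
  } catch (err) {
    // Report the failure to the client instead of crashing the signaling server.
    return { ok: false, error: String(err) };
  }
}
```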

📡 5. produce() — Sending the Stream to the SFU

Once the send transport is connected, the browser “produces” media — sending audio and video tracks to the SFU.

Each track gets a unique producerId.

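Mediasoup itself doesn’t broadcast anything. The server code that created the producer uses the signaling channel (Socket.IO) to tell the other peers in the room that a new producer is available.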
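A minimal sketch of the server side of `produce()` follows. In mediasoup-client, `sendTransport.produce({ track })` fires a `produce` event with `{ kind, rtpParameters }` and a callback that expects `{ id }`. On the server, the mediasoup transport's `produce({ kind, rtpParameters })` creates the real Producer. `handleProduce`, `NewProducerEvent`, and the `notifyOthers` callback are hypothetical names. With Socket.IO, `notifyOthers` might wrap `socket.to(roomId).emit(...)`.

```typescript
type MediaKind = "audio" | "video";

// Structural stand-in for a server-side mediasoup transport: the real
// transport.produce({ kind, rtpParameters }) resolves to a Producer with an id.
interface ProducingTransport {
  produce(options: { kind: MediaKind; rtpParameters: unknown }): Promise<{ id: string; kind: MediaKind }>;
}

interface NewProducerEvent {
  producerId: string;
  peerId: string;
  kind: MediaKind;
}

// Hypothetical "produce" signaling handler.
async function handleProduce(
  transport: ProducingTransport,
  peerId: string,
  request: { kind: MediaKind; rtpParameters: unknown },
  // e.g. (event) => socket.to(roomId).emit("newProducer", event) with Socket.IO
  notifyOthers: (event: NewProducerEvent) => void,
): Promise<{ id: string }> {
  const producer = await transport.produce({ kind: request.kind, rtpParameters: request.rtpParameters });
  // Mediasoup doesn't announce producers itself; the app tells the rest of the room.
  notifyOthers({ producerId: producer.id, peerId, kind: producer.kind });
  // Returned to the browser, which hands it to the callback of its "produce" event.
  return { id: producer.id };
}
```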

🎧 6. consume() — Receiving Streams

When another user (User B) joins, they’re notified about available producers.

They create their own recvTransport, connect it (via ICE + DTLS), and call consume() to receive the stream.
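On the server, `consume()` is usually two steps. First, `router.canConsume({ producerId, rtpCapabilities })` checks that User B's browser can decode the stream. Then the recv transport's `consume({ producerId, rtpCapabilities, paused: true })` creates the Consumer. The mediasoup docs recommend creating server-side consumers paused and resuming them once the client side is ready. The handler below is a hypothetical sketch of that flow, with structural types standing in for the real Router and transport.

```typescript
type MediaKind = "audio" | "video";

// Structural stand-ins for the mediasoup Router and WebRtcTransport methods used here.
interface ConsumeRouter {
  canConsume(options: { producerId: string; rtpCapabilities: unknown }): boolean;
}

interface ConsumerParams {
  id: string;
  producerId: string;
  kind: MediaKind;
  rtpParameters: unknown;
}

interface ConsumingTransport {
  consume(options: {
    producerId: string;
    rtpCapabilities: unknown;
    paused?: boolean;
  }): Promise<ConsumerParams>;
}

// Hypothetical "consume" signaling handler for User B's recv transport.
async function handleConsume(
  router: ConsumeRouter,
  recvTransport: ConsumingTransport,
  request: { producerId: string; rtpCapabilities: unknown },
): Promise<ConsumerParams | null> {
  // Refuse early if User B's browser can't decode what the producer sends.
  if (!router.canConsume({ producerId: request.producerId, rtpCapabilities: request.rtpCapabilities })) {
    return null;
  }
  // Start paused; the client asks the server to resume once its own Consumer
  // exists, so no media is forwarded before the browser is ready for it.
  const consumer = await recvTransport.consume({ ...request, paused: true });
  // These fields are what mediasoup-client's recvTransport.consume() expects.
  return {
    id: consumer.id,
    producerId: consumer.producerId,
    kind: consumer.kind,
    rtpParameters: consumer.rtpParameters,
  };
}
```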

Mediasoup handles all the forwarding internally. Even if 50 users join, each one uploads only a single stream per track, and the SFU fans it out to everyone else. Each user still downloads one stream per remote track.

That’s the power of an SFU.
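A bit of arithmetic shows why this matters. The sketch below counts video streams for an N-person call. It assumes everyone sends exactly one video track and watches everyone else, with no simulcast or active-speaker filtering. The function names are hypothetical.

```typescript
interface PeerStreams {
  uplinks: number; // copies of my video I upload
  downlinks: number; // videos I download
}

function assertPeerCount(n: number): void {
  if (!Number.isInteger(n) || n < 2) throw new Error("need at least two participants");
}

// Mesh (no SFU): every browser uploads a separate copy to every other browser.
function meshPerPeer(n: number): PeerStreams {
  assertPeerCount(n);
  return { uplinks: n - 1, downlinks: n - 1 };
}

// SFU: every browser uploads once; the SFU fans that one stream out.
function sfuPerPeer(n: number): PeerStreams {
  assertPeerCount(n);
  return { uplinks: 1, downlinks: n - 1 };
}

// Load on the SFU itself: n streams in, n * (n - 1) streams out.
function sfuTotals(n: number): { incoming: number; outgoing: number } {
  assertPeerCount(n);
  return { incoming: n, outgoing: n * (n - 1) };
}

// With 50 participants:
//   meshPerPeer(50) -> { uplinks: 49, downlinks: 49 }
//   sfuPerPeer(50)  -> { uplinks: 1,  downlinks: 49 }
//   sfuTotals(50)   -> { incoming: 50, outgoing: 2450 }
```

The SFU removes the upload bottleneck, which is usually the scarcest link on a home connection. Downloads still grow with room size, though, and the server's outgoing traffic grows roughly with N².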

🔄 7. RTP/RTCP Flow — The Real-Time Highway

Once producers and consumers are connected, real-time packets start flying:

- RTP carries the actual audio/video frames.

- RTCP sends feedback — like packet loss, jitter, or delay — to keep streams stable.

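The SFU forwards each packet to every consumer of that producer. It also uses RTCP feedback to keep quality up, for example by retransmitting lost packets (NACK) or requesting a fresh keyframe when a receiver can’t decode.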
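Mediasoup generates and reads these RTCP reports for you, so an app never needs this code. Still, a small sketch of the two classic RTCP receiver statistics from RFC 3550 shows what "packet loss" and "jitter" actually mean as numbers. The function and class names are hypothetical. The clamp to 255 is an added safety assumption, not part of the RFC formula.

```typescript
// Fraction lost, as carried in an RTCP Receiver Report (RFC 3550, appendix A.3):
// an 8-bit fixed-point number, lost / expected * 256, over the last report interval.
function rtcpFractionLost(expectedInterval: number, receivedInterval: number): number {
  const lostInterval = expectedInterval - receivedInterval;
  // Duplicates can make "lost" negative; RFC 3550 reports that as 0.
  if (expectedInterval <= 0 || lostInterval <= 0) return 0;
  // Clamped (assumption) so 100% loss still fits in 8 bits.
  return Math.min(255, Math.floor((lostInterval * 256) / expectedInterval));
}

// Interarrival jitter estimator (RFC 3550, section 6.4.1). Both inputs must be
// in RTP timestamp units (e.g. 90 kHz ticks for video).
class JitterEstimator {
  private jitter = 0;
  private prevTransit: number | null = null;

  onPacket(arrivalInRtpUnits: number, rtpTimestamp: number): number {
    const transit = arrivalInRtpUnits - rtpTimestamp;
    if (this.prevTransit !== null) {
      const d = Math.abs(transit - this.prevTransit);
      // Exponential smoothing with gain 1/16, as specified by the RFC.
      this.jitter += (d - this.jitter) / 16;
    }
    this.prevTransit = transit;
    return this.jitter;
  }
}
```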

🧩 Why It’s Complex

Most “Zoom clone” tutorials only show two peers talking. But scaling beyond that takes architecture — not just code.

Mediasoup doesn’t just forward packets; it orchestrates an entire media symphony.

And once you understand the dance between transports, producers, and consumers, you realize — this “octopus” isn’t scary. It’s just very, very good at multitasking.

🌐 GitHub Repository

👉 https://github.com/NeurologiaLogic/scalable-zoom-clone

At the end of the day, Mediasoup isn’t just an SFU — it’s a teacher. It shows you how real-time communication actually works. And yes, sometimes it feels like you’re debugging an octopus — but once you learn which tentacle does what, it’s oddly satisfying.